SQL Server Database Index optimization – maintenance plan

Properly optimized table indexes are crucial in database performance tuning. This is especially true when the database is growing overtime causing indexes to be fragmented. The more an index is fragmented, the longer time is needed for database reads.

When should the indexes be rebuilt? Microsoft recommends to reorganize indexes when fragmentation level is between 5% and 30%. When fragmentation is above 30% then the index should be rebuilt.

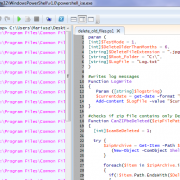

The best way of ensuring low fragmentation levels is to setup maintenance plan for the database that will be triggered periodically (preferably at night). The script below can be used in scheduled job. You can use @PrintInformationOnly param to print the index information first, for all database tables. When you set it to 0, the actual rebuild or reorganize process will start. I have also added @ExcludeTableList to exclude some tables that should be skipped (for example are too big or not used).

DECLARE @return_value int EXEC @return_value = [dbo].[IndexOptimize] @databaseName = N'myDatabase', @IndexFragmentationMin = 5, @IndexFragmentationRebuildMin = 30, @PrintInformationOnly = 1 SELECT 'Return Value' = @return_value

The actual script starts here. You may want to adjust FILLFACTOR params separately for each tables as this should be configured depending on data update frequency. By setting MAXDOP= 1 param, we disable parallel threads during rebuild process, so the server CPU will not go to high.

I have successfully tested this script on database of size about 150 GB. Be aware that initial index rebuild may take a few hours, depending on fragmentation levels.

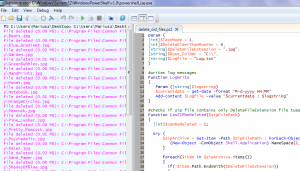

CREATE PROCEDURE [dbo].[IndexOptimize]

@databaseName varchar(200) = 'MyTable',

@IndexFragmentationMin int = 5,

@IndexFragmentationRebuildMin int = 30,

@skipLargeTables bit = 0,

@ExcludeTableList nvarchar(max) = 'MyBigTable1,MyBigTable2',

@PrintInformationOnly bit = 0

AS

BEGIN

BEGIN TRY

declare @dbid int = 0;

set @dbid = (select [dbid] from [Master].dbo.[sysdatabases] where name = @databaseName);

------------------

DECLARE @Schema VARCHAR(200);

DECLARE @Table NVARCHAR(200);

DECLARE @Index VARCHAR(200);

DECLARE @avg_fragmentation_in_percent int;

DECLARE @page_count int;

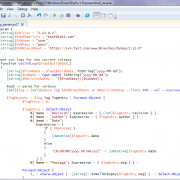

DECLARE db_cursor CURSOR FOR

SELECT

dbschemas.[name] as 'Schema',

dbtables.[name] as 'Table',

dbindexes.[name] as 'Index',

indexstats.avg_fragmentation_in_percent,

indexstats.page_count

FROM sys.dm_db_index_physical_stats (@dbid, NULL, NULL, NULL, NULL) AS indexstats

INNER JOIN sys.tables dbtables on dbtables.[object_id] = indexstats.[object_id]

INNER JOIN sys.schemas dbschemas on dbtables.[schema_id] = dbschemas.[schema_id]

INNER JOIN sys.indexes AS dbindexes ON dbindexes.[object_id] = indexstats.[object_id]

AND indexstats.index_id = dbindexes.index_id

WHERE dbindexes.[name] is not null and indexstats.database_id = @dbid

ORDER BY indexstats.avg_fragmentation_in_percent desc

OPEN db_cursor

FETCH NEXT FROM db_cursor INTO @Schema, @Table, @Index, @avg_fragmentation_in_percent, @page_count

WHILE @@FETCH_STATUS = 0

BEGIN

if(@skipLargeTables = 1 and CHARINDEX(@Table, @ExcludeTableList) > 0)

begin

print 'skipped index (' + CONVERT(varchar(10),@avg_fragmentation_in_percent) + '%) ' + @Index + ' for table ' + @Table + ', page count ' + CONVERT(varchar(10),@page_count)

end

else

begin

if(@avg_fragmentation_in_percent <= @IndexFragmentationRebuildMin and @avg_fragmentation_in_percent > @IndexFragmentationMin and LEN(@Index) > 3)

begin

print 'reorganizing index (' + CONVERT(varchar(10),@avg_fragmentation_in_percent) + '%) ' + @Index + ' for table ' + @Table + ', page count ' + CONVERT(varchar(10),@page_count)

if(@PrintInformationOnly = 0)

begin

exec('ALTER INDEX ' + @Index + ' ON dbo.' + @Table + ' REORGANIZE;');

end

end

if(@avg_fragmentation_in_percent > @IndexFragmentationRebuildMin and LEN(@Index) > 3)

begin

print 'rebuilding index (' + CONVERT(varchar(10),@avg_fragmentation_in_percent) + '%) ' + @Index + ' for table ' + @Table + ', page count ' + CONVERT(varchar(10),@page_count)

if(@PrintInformationOnly = 0)

begin

exec('ALTER INDEX ' + @Index + ' ON dbo.' + @Table + ' REBUILD WITH (MAXDOP=1,FILLFACTOR = 90)');

end

end

end

FETCH NEXT FROM db_cursor INTO @Schema, @Table, @Index, @avg_fragmentation_in_percent, @page_count

END

CLOSE db_cursor

DEALLOCATE db_cursor

END TRY

BEGIN CATCH

DECLARE @ErrorMessage NVARCHAR(4000);

DECLARE @ErrorSeverity INT;

DECLARE @ErrorState INT;

SELECT @ErrorMessage = 'Message: '+ ERROR_MESSAGE() + CHAR(13) + CHAR(10) +

'Procedure: ' + CAST(ERROR_PROCEDURE() AS VARCHAR(MAX)) + CHAR(13) + CHAR(10) +

'Line: ' + CAST(ERROR_LINE() AS VARCHAR(MAX)) + CHAR(13) + CHAR(10) +

'Number: ' + CAST(ERROR_NUMBER() AS VARCHAR(MAX)), @ErrorSeverity = ERROR_SEVERITY(), @ErrorState = ERROR_STATE();

RAISERROR (@ErrorMessage, @ErrorSeverity, @ErrorState);

RETURN 0

END CATCH

END

(1 votes, average: 4.00 out of 5)

(1 votes, average: 4.00 out of 5)