Using Windows Azure Service Management REST API in automated tasks

The Windows Azure platform gives us a lot of new possibilities, from auto-scaling instances of a deployed application to performing automated changes on demand. When handling multiple applications deployed to the cloud, it pays to automate daily processes in order to save development time.

In this article I will show you how to automate the process of creating a new cloud service using the Windows Azure REST API. In our example we will create a custom API helper that builds the request object and then uses it to invoke the Azure RESTful API operation.

Accessing the WA API does not require the Azure subscription user name and password; all you need is the subscription ID and a management certificate. This makes it possible to delegate some administrative tasks to other people in the company who do not necessarily have access to the subscription account.

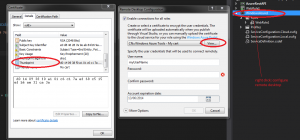

The first thing to do is to create a management certificate and upload it to the WA Management Portal. One way to create a certificate is from within Visual Studio: right-click the cloud project and open the remote desktop configuration wizard, then select “create new” from the menu. Once the certificate is created we can view it and export it to a .cer file. At this stage we also need to note the certificate’s thumbprint, which will be used to find it in the local store.

The image below shows the process of configuring a new RDP connection and creating a new certificate.

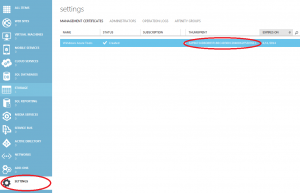

After we have created the certificate and exported it to a file, we can upload it to the WA Management Portal as shown below.

Please note that the certificate thumbprint shown in the portal is the same as our local one.

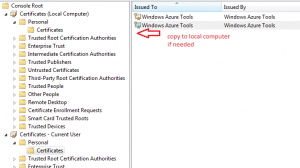

We also need to make sure that our API helper will find the certificate in our local store. To check its location, open the Microsoft Management Console (mmc) and add the Certificates snap-in for both the current user and the local computer. Next, copy the certificate into the local computer’s Personal store, which is where the helper looks for it, as depicted below.

At this stage we can start implementing our API request helper. Let’s first create a custom PayLoadSettings class that will hold the basic request settings.

    // Basic settings needed to build a Service Management API request
    public class PayLoadSettings
    {
        public string CloudServiceUrlFormat { get; set; }
        public string SubscriptionId { get; set; }
        public string Thumbprint { get; set; }
        public string ServiceName { get; set; }
        public string Label { get; set; }
        public string Description { get; set; }
        public string Location { get; set; }
        public string AffinityGroup { get; set; }
        public string VersionId { get; set; }
    }

Next, let’s create a function that retrieves our newly created certificate (already uploaded to the WA Management Portal) from the local machine store.

    /// <summary>
    /// Get certificate from the local machine store by thumbprint
    /// </summary>
    /// <returns>The matching certificate</returns>
    private X509Certificate2 GetX509Certificate()
    {
        var certStore = new X509Store(StoreName.My, StoreLocation.LocalMachine);
        try
        {
            certStore.Open(OpenFlags.ReadOnly);

            // validOnly = false, so self-signed certificates are returned as well
            var x509CertificateCollection = certStore.Certificates.Find(X509FindType.FindByThumbprint, this.PayLoadSettings.Thumbprint, false);

            // Fail with a clear message instead of an index-out-of-range error
            if (x509CertificateCollection.Count == 0)
            {
                throw new InvalidOperationException("Certificate not found in LocalMachine\\My: " + this.PayLoadSettings.Thumbprint);
            }

            return x509CertificateCollection[0];
        }
        finally
        {
            certStore.Close();
        }
    }
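A thumbprint copied from the certificate dialog is shown with spaces between the byte pairs and can carry an invisible leading character. To avoid depending on how the store lookup treats such input, the value can be cleaned up before it is used. The following is only a sketch; the `ThumbprintHelper` class and its `Normalize` method are hypothetical names and are not part of the attached project.

```csharp
using System;
using System.Linq;

// Hypothetical helper, not part of the original project.
public static class ThumbprintHelper
{
    // Keeps only hexadecimal digits and upper-cases them, so a value such as
    // "3a f6 67 24" becomes "3AF66724".
    public static string Normalize(string thumbprint)
    {
        if (thumbprint == null)
        {
            throw new ArgumentNullException("thumbprint");
        }

        var hexDigits = thumbprint.Where(Uri.IsHexDigit).ToArray();
        return new string(hexDigits).ToUpperInvariant();
    }
}
```

The normalized value could then be passed to the certificate lookup in place of the raw setting.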

Next, we want to create a function that attaches our certificate to a new request object, which will be sent to execute the remote action. We also set the requested API version in the x-ms-version header, which the Service Management API expects on each request.

    /// <summary>
    /// Create http request object with the certificate added
    /// </summary>
    /// <param name="uri">Address of the API operation</param>
    /// <param name="httpWebRequestMethod">HTTP method, e.g. POST</param>
    /// <returns>Request ready to be sent</returns>
    private HttpWebRequest CreateHttpWebRequest(Uri uri, string httpWebRequestMethod)
    {
        var x509Certificate = GetX509Certificate();

        var httpWebRequest = (HttpWebRequest)WebRequest.Create(uri);
        httpWebRequest.Method = httpWebRequestMethod;
        httpWebRequest.Headers.Add("x-ms-version", this.PayLoadSettings.VersionId);
        httpWebRequest.ClientCertificates.Add(x509Certificate);
        httpWebRequest.ContentType = "application/xml";

        return httpWebRequest;
    }

The next step is to create the payload document containing the parameters of the operation that we want to execute. The names are self-explanatory.

    /// <summary>
    /// Create payload document
    /// </summary>
    /// <returns>XML document describing the new hosted service</returns>
    private XDocument CreatePayload()
    {
        // azureNamespace is an XNamespace field of the helper class;
        // the Service Management API uses http://schemas.microsoft.com/windowsazure

        // The label has to be sent base64-encoded
        var base64LabelName = Convert.ToBase64String(Encoding.UTF8.GetBytes(this.PayLoadSettings.Label));

        var xServiceName = new XElement(azureNamespace + "ServiceName", this.PayLoadSettings.ServiceName);
        var xLabel = new XElement(azureNamespace + "Label", base64LabelName);
        var xDescription = new XElement(azureNamespace + "Description", this.PayLoadSettings.Description);
        var xLocation = new XElement(azureNamespace + "Location", this.PayLoadSettings.Location);
        var xAffinityGroup = new XElement(azureNamespace + "AffinityGroup", this.PayLoadSettings.AffinityGroup);

        var createHostedService = new XElement(azureNamespace + "CreateHostedService");
        createHostedService.Add(xServiceName);
        createHostedService.Add(xLabel);
        createHostedService.Add(xDescription);
        createHostedService.Add(xLocation);
        createHostedService.Add(xAffinityGroup);

        var payload = new XDocument();
        payload.Add(createHostedService);
        payload.Declaration = new XDeclaration("1.0", "UTF-8", "no");

        return payload;
    }
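The CreatePayload function above always adds both a Location and an AffinityGroup element, even when one of them is empty. Assuming the service treats the two as alternatives, a variant that emits only the one that is actually set might look like the sketch below. The `HostedServicePayload` class and its parameter names are made up for this illustration; the namespace value is the one used by the Service Management API.

```csharp
using System;
using System.Text;
using System.Xml.Linq;

// Hypothetical variant of CreatePayload, not part of the original project.
public static class HostedServicePayload
{
    private static readonly XNamespace AzureNamespace = "http://schemas.microsoft.com/windowsazure";

    public static XDocument Create(string serviceName, string label, string description, string location, string affinityGroup)
    {
        var root = new XElement(AzureNamespace + "CreateHostedService",
            new XElement(AzureNamespace + "ServiceName", serviceName),
            new XElement(AzureNamespace + "Label", Convert.ToBase64String(Encoding.UTF8.GetBytes(label))),
            new XElement(AzureNamespace + "Description", description));

        // Assumption: exactly one of the two placement elements should be sent.
        if (!string.IsNullOrEmpty(affinityGroup))
        {
            root.Add(new XElement(AzureNamespace + "AffinityGroup", affinityGroup));
        }
        else
        {
            root.Add(new XElement(AzureNamespace + "Location", location));
        }

        return new XDocument(new XDeclaration("1.0", "UTF-8", "no"), root);
    }
}
```

With an empty affinity group, as in the console example in this article, the resulting document contains a Location element and no AffinityGroup element.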

With the payload document created, we can send our request and retrieve the request ID if the operation is successful.

    /// <summary>
    /// Invoke Api operation by sending payload object
    /// </summary>
    /// <param name="uri">Address of the API operation</param>
    /// <param name="payload">XML document to send</param>
    /// <returns>Request id returned by the service</returns>
    private string InvokeAPICreateRequest(string uri, XDocument payload)
    {
        string requestId;

        var operationUri = new Uri(uri);
        var httpWebRequest = CreateHttpWebRequest(operationUri, "POST");

        // Write the payload into the request body
        using (var requestStream = httpWebRequest.GetRequestStream())
        {
            using (var streamWriter = new StreamWriter(requestStream, Encoding.UTF8))
            {
                payload.Save(streamWriter, SaveOptions.DisableFormatting);
            }
        }

        // Send the request and read the id assigned to it by the service
        using (var response = (HttpWebResponse)httpWebRequest.GetResponse())
        {
            requestId = response.Headers["x-ms-request-id"];
        }

        return requestId;
    }
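When the service rejects a request, GetResponse throws a WebException, and its Message only carries the HTTP status. The details are in the response body. The sketch below assumes that the body is an Error element with Code and Message children in the Service Management namespace; the `ApiErrorReader` class is a hypothetical name and is not part of the attached project.

```csharp
using System;
using System.IO;
using System.Net;
using System.Xml;
using System.Xml.Linq;

// Hypothetical helper, not part of the original project.
public static class ApiErrorReader
{
    private static readonly XNamespace AzureNamespace = "http://schemas.microsoft.com/windowsazure";

    // Turns an error body of the assumed shape into "Code: Message".
    // Falls back to the raw text when the body is not XML.
    public static string Describe(string errorXml)
    {
        try
        {
            var error = XDocument.Parse(errorXml).Root;
            var code = (string)error.Element(AzureNamespace + "Code");
            var message = (string)error.Element(AzureNamespace + "Message");
            return code + ": " + message;
        }
        catch (XmlException)
        {
            return errorXml;
        }
    }

    // Reads the response body attached to a failed request, if there is one.
    public static string Describe(WebException exception)
    {
        if (exception.Response == null)
        {
            return exception.Message;
        }

        using (var reader = new StreamReader(exception.Response.GetResponseStream()))
        {
            return Describe(reader.ReadToEnd());
        }
    }
}
```

A caller could catch WebException around the request and print `ApiErrorReader.Describe(ex)` instead of `ex.Message`.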

The final function just puts it all together as follows.

    /// <summary>
    /// Execute create cloud service request
    /// </summary>
    /// <returns>Request id returned by the service</returns>
    public string CreateCloudService()
    {
        // Insert the subscription id into the url template
        var cloudServiceUrl = string.Format(this.PayLoadSettings.CloudServiceUrlFormat, this.PayLoadSettings.SubscriptionId);

        var payload = CreatePayload();
        var requestId = InvokeAPICreateRequest(cloudServiceUrl, payload);

        return requestId;
    }
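The request ID returned by CreateCloudService can be used to ask the service about the outcome of the operation. The sketch below assumes the operation status address has the form `https://management.core.windows.net/<subscription-id>/operations/<request-id>` and that the response is an Operation element with a Status child. The `OperationStatus` class is a hypothetical name, and sending the GET request (with the same certificate and x-ms-version header as before) is left out.

```csharp
using System;
using System.Xml.Linq;

// Hypothetical helper, not part of the original project.
public static class OperationStatus
{
    private static readonly XNamespace AzureNamespace = "http://schemas.microsoft.com/windowsazure";

    // Builds the address to query for a given request id (assumed url format).
    public static Uri BuildUri(string subscriptionId, string requestId)
    {
        return new Uri(string.Format("https://management.core.windows.net/{0}/operations/{1}", subscriptionId, requestId));
    }

    // Extracts the Status value from the response body;
    // returns null when the element is missing.
    public static string ReadStatus(string operationXml)
    {
        var operation = XDocument.Parse(operationXml).Root;
        return (string)operation.Element(AzureNamespace + "Status");
    }
}
```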

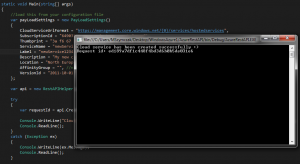

If we invoke the code from a console application, it should look as below.

    static void Main(string[] args)
    {
        // load this from your configuration file
        var payLoadSettings = new PayLoadSettings()
        {
            CloudServiceUrlFormat = "https://management.core.windows.net/{0}/services/hostedservices",
            SubscriptionId = "92533879-88c9-41fe-b24e-5251bcf49a8f", // fake subscription id - please provide yours
            Thumbprint = "3a f6 67 24 d8 d8 b3 71 b0 c4 d3 00 c2 04 0d 62 e5 30 76 1c", // fake cert thumbprint - please provide yours
            ServiceName = "newService1234567", // name your new service
            Label = "newService1234567", // give it a tracking label
            Description = "My new cloud service", // service description
            Location = "North Europe", // select data centre
            AffinityGroup = "", // not created yet
            VersionId = "2011-10-01" // api version
        };

        var api = new RestAPIHelper(payLoadSettings);

        try
        {
            var requestId = api.CreateCloudService();
            Console.WriteLine("Cloud service has been created successfully :)" + Environment.NewLine + "Request id: " + requestId);
            Console.ReadLine();
        }
        catch (Exception ex)
        {
            Console.WriteLine(ex.Message);
            Console.ReadLine();
        }
    }
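The comment in Main suggests loading the settings from a configuration file. One possible way to do this is to read each value from a key/value collection and fail early when a value is missing. The `SettingsReader` class and the key names are hypothetical and only serve as an illustration.

```csharp
using System;
using System.Collections.Specialized;

// Hypothetical helper, not part of the original project.
public static class SettingsReader
{
    // Returns the value stored under the key, or throws when it is missing or blank.
    public static string Require(NameValueCollection settings, string key)
    {
        var value = settings[key];
        if (string.IsNullOrWhiteSpace(value))
        {
            throw new InvalidOperationException("Missing setting: " + key);
        }

        return value;
    }
}
```

In a console project that references System.Configuration, `ConfigurationManager.AppSettings` is such a collection, so a setting could be filled with, for example, `SubscriptionId = SettingsReader.Require(ConfigurationManager.AppSettings, "SubscriptionId")`.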

Let’s run the console application now.

After executing the above, we can check in the WA Management Portal that the cloud service has been created. It should look like the images below.

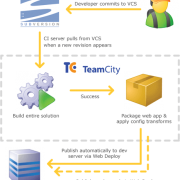

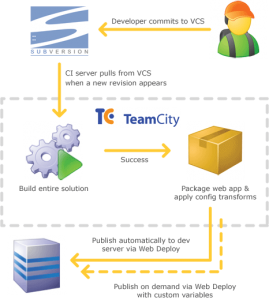

I have attached the project files for your tests. Please note that you need to provide your own configuration settings for it to work. You can also use the above example as a starting point for your own automated tasks for Windows Azure: simply implement other operations in a similar way. You can then use, for example, TeamCity to run them automatically when needed. This opens up a lot of possibilities and saves your precious development time.