Auto-publishing reports to Tableau Server

Tableau is a great tool for data visualization; however, if you use it a lot, you may want to automate some tasks. One of them is publishing and updating reports on Tableau Server. This is where the Tableau command-line utility (tabcmd) comes in handy: you can install it on your development server and call it from a PowerShell script. One approach is to use a CI server for automatic deployments. You then only need to give Git/SVN access to the users who change existing reports or add new ones.
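When tabcmd runs unattended on a CI server, a failed call (for example, a rejected login) should stop the build rather than being silently ignored. Below is a minimal sketch. It assumes tabcmd reports failure through a non-zero exit code, which PowerShell exposes as `$LASTEXITCODE`. `Invoke-Checked` is a hypothetical helper name and is not part of tabcmd or PowerShell.

```powershell
# Hypothetical helper (not part of tabcmd): run an external command and
# throw if it exits with a non-zero code, so the CI build step fails loudly.
function Invoke-Checked {
    param(
        [Parameter(Mandatory)] [string]$Exe,  # executable to run, e.g. ".\tabcmd"
        [string[]]$Arguments = @()            # arguments passed through as-is
    )
    & $Exe @Arguments                         # the tool's own output goes to the build log
    if ($LASTEXITCODE -ne 0) {
        # arguments are left out of the message so a password is never echoed to the log
        throw "'$Exe' failed with exit code $LASTEXITCODE"
    }
}
```

For example: `Invoke-Checked -Exe .\tabcmd -Arguments @('login', '-s', $server, '-u', $admin, '-p', $pass)`. An uncaught error makes a script started with `powershell -File` end with a non-zero exit code, which the CI server can report as a failed step. Whether it does so depends on how the build runner is configured.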

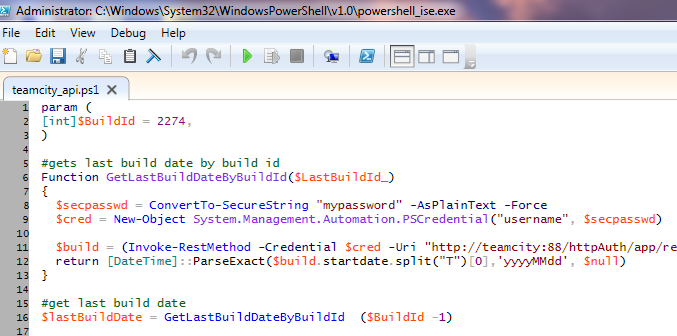

The following script can be run as a build step in TeamCity. It detects workbooks that have changed recently (within the last 10 minutes) and publishes them automatically to Tableau Server. The parent folder of each workbook is used as the project name when publishing. To run it, just pass in the email notification list and the server password. Of course, you first need to configure the other parameters (server URL, SMTP server, etc.).
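The selection rule described above (workbooks changed in the last 10 minutes, with the parent folder used as the project name) can be isolated in a function. That way, it can be checked without touching the server. Below is a minimal sketch. `Get-ChangedWorkbook` and its parameters are hypothetical names. The 10-minute window is the script's own choice, and it assumes that the CI checkout rewrites only the changed files, so their modification time is close to the build time.

```powershell
# Hypothetical helper mirroring the script's selection rule: .twb workbooks
# modified within the last $Minutes minutes, paired with their parent folder name.
function Get-ChangedWorkbook {
    param(
        [Parameter(Mandatory)] [string]$Root,  # folder to scan recursively (the checkout directory)
        [int]$Minutes = 10                     # look-back window, as in the script
    )
    $cutoff = (Get-Date).AddMinutes(-$Minutes)
    Get-ChildItem -Path $Root -Recurse -File |
        Where-Object { $_.Extension -eq '.twb' -and $_.LastWriteTime -gt $cutoff } |
        ForEach-Object {
            [pscustomobject]@{
                Path    = $_.FullName
                Project = $_.Directory.Name    # parent folder becomes the Tableau project
            }
        }
}
```

In the script, the publishing loop could then read `Get-ChangedWorkbook -Root $currentDir | ForEach-Object { .\tabcmd publish $_.Path -r $_.Project -o }`.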

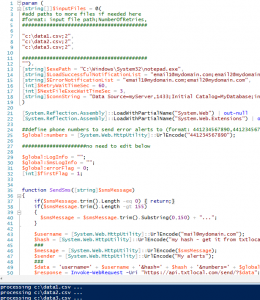

```powershell
param (
    [string]$cmddir = "C:\Program Files\Tableau\Tableau Server\8.2\extras\Command Line Utility", # where tabcmd is installed
    [string]$server = "https://tableau:81", # URL of the Tableau server
    [string]$currentDir = (Split-Path -Parent $MyInvocation.MyCommand.Definition) + "\", # folder containing this script
    [string]$notificationEmailList = "test1@test.com,test2@test.com", # recipients of the success notification
    [string]$admin = "user", # admin account for the server
    [string]$pass = "" # to be passed in as a parameter
)

function SendEmail($emailTo, $title, $body)
{
    $smtp = New-Object Net.Mail.SmtpClient("my_smtp_server")
    $smtp.Send("sentAs@mydomain.com", $emailTo, $title, $body)
}

$script:published = ""

# log in to Tableau
Set-Location $cmddir
.\tabcmd login -s $server -u $admin -p $pass

# publish every workbook (.twb) below the script folder that changed in the last 10 minutes
Get-ChildItem -Path $currentDir -Recurse | Where-Object {
    $_.LastWriteTime -gt (Get-Date).AddMinutes(-10) -and $_.Extension -eq ".twb"
} |
ForEach-Object {
    # the parent folder name is used as the Tableau project name
    [string]$projectName = $_.Directory.Name

    # publish or overwrite the workbook on the server
    .\tabcmd publish $_.FullName -r $projectName -o

    # list the workbook in the notification only if tabcmd reported success
    if ($LASTEXITCODE -eq 0) {
        $script:published += $_.Name + " | "
    }
}

# more commands
# .\tabcmd publish "workbook.twbx" -r "project name" -n "Workbook Name" --db-user "" --db-password "" -o

# log out to release the session
.\tabcmd logout

# send the notification only if at least one workbook was published
if ($script:published -ne "")
{
    SendEmail $notificationEmailList "Tableau report published" "The following report(s) have just been successfully published to Tableau Server: $script:published"
}
```
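The commented-out command near the end of the script hints at publishing packaged workbooks (.twbx) under a custom display name. One way to handle such optional switches is to build the tabcmd arguments as an array and splat it. Then `-n` is added only when a name is given, and the final argument list can be logged before it runs. Below is a minimal sketch. The function name is hypothetical, and it uses only the `publish` options that already appear in the script (`-r`, `-n`, `-o`).

```powershell
# Hypothetical helper: build the argument list for "tabcmd publish" so that
# optional switches are only added when needed; run it with: .\tabcmd @tabArgs
function New-TabcmdPublishArgs {
    param(
        [Parameter(Mandatory)] [string]$Path,     # .twb or .twbx file to publish
        [Parameter(Mandatory)] [string]$Project,  # target project (-r)
        [string]$Name                            # optional display name on the server (-n)
    )
    $tabArgs = @('publish', $Path, '-r', $Project)
    if ($Name) {
        $tabArgs += @('-n', $Name)
    }
    $tabArgs += '-o'                             # overwrite the workbook if it already exists
    $tabArgs
}
```

Inside the publishing loop, this could be used as `$tabArgs = @(New-TabcmdPublishArgs -Path $_.FullName -Project $_.Directory.Name -Name $_.BaseName); .\tabcmd @tabArgs`, where `BaseName` is the file name without its extension. The script's `.twb` filter would also need to accept `.twbx` for packaged workbooks to be picked up.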

Enjoy!